41 soft labels deep learning

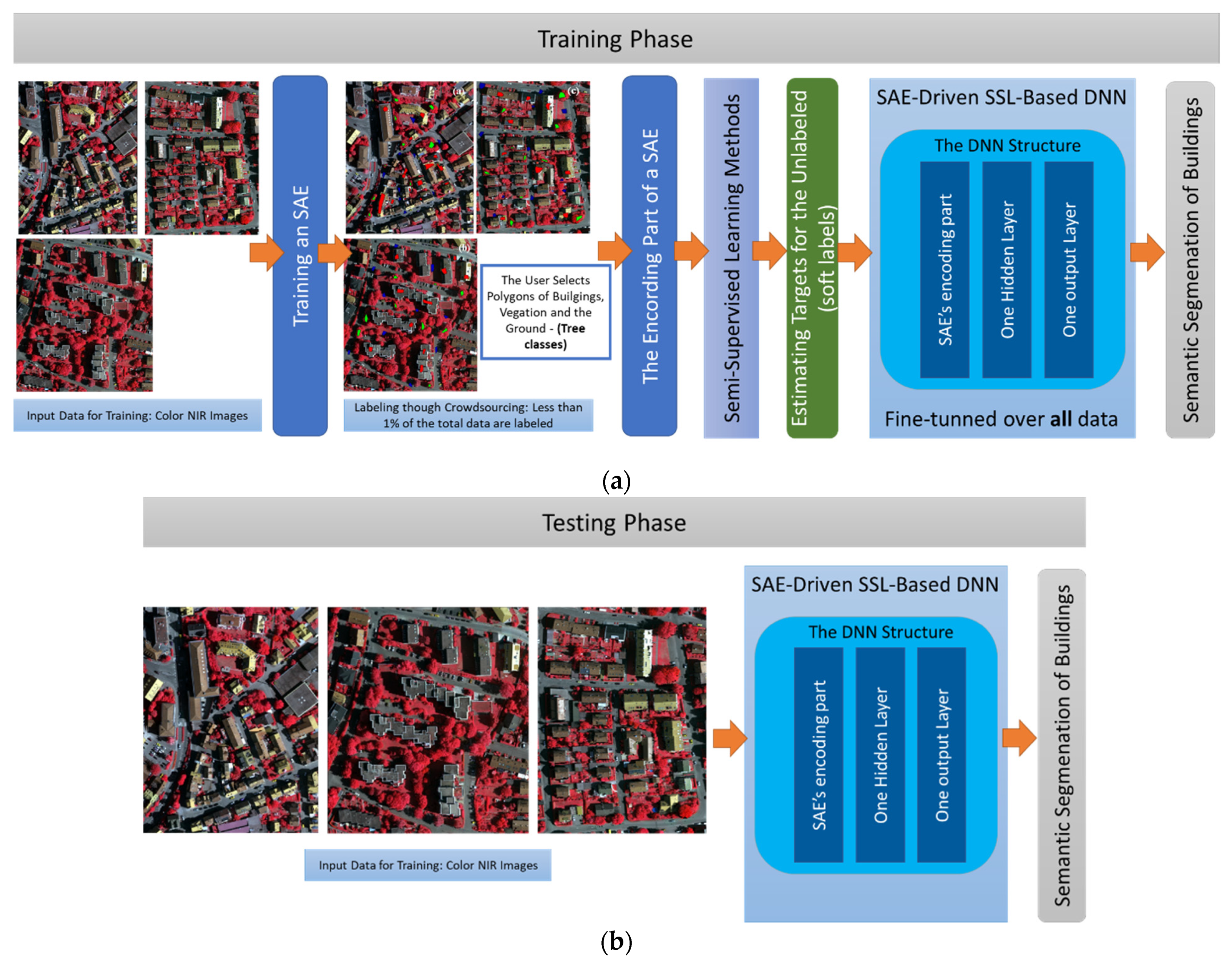

Soft Labels and Supervised Image Classification (DRAFT Oct 7, 2021 ... Machine learning is used daily in areas such as security, medical care, and financial systems. Failures in such institutions can have dire ...

How To Label Data For Semantic Segmentation Deep Learning Models ... Image segmentation deep learning can gather accurate information of such fields that helps to monitor the urbanization and deforestation through images taken from satellites or autonomous flying ...

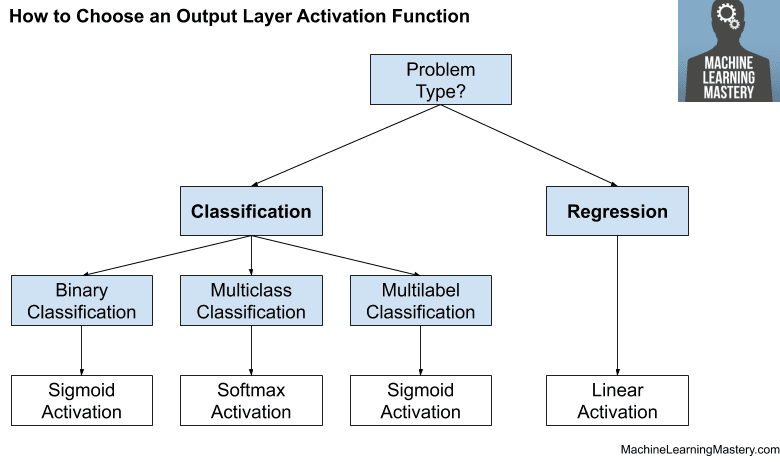

Softmax Classifiers Explained - PyImageSearch Understanding Multinomial Logistic Regression and Softmax Classifiers. The Softmax classifier is a generalization of the binary form of Logistic Regression. Just like in hinge loss or squared hinge loss, our mapping function f is defined such that it takes an input set of data x and maps them to the output class labels via a simple (linear) dot ...
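The mapping described in the snippet above (a linear dot product followed by softmax) can be sketched in a few lines. This is a minimal illustration, not code from the linked article: the weights `W`, biases `b`, and input `x` are made-up toy values, and the helper names are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def linear_scores(W, b, x):
    """One score per class: dot product of a weight row with x, plus a bias."""
    return [sum(w * v for w, v in zip(row, x)) + bias for row, bias in zip(W, b)]

# Hypothetical toy parameters: 3 classes, 2 input features.
W = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]
b = [0.0, 0.1, -0.2]
x = [2.0, 1.0]

scores = linear_scores(W, b, x)          # raw scores, one per class
probs = softmax(scores)                  # class probabilities
predicted_class = max(range(len(probs)), key=probs.__getitem__)
```

The probability vector `probs` is itself a soft label; taking the index of its largest entry collapses it to a hard label.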

Soft labels deep learning

Understanding Deep Learning on Controlled Noisy Labels - Google AI Blog In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...

Label-Free Quantification You Can Count On: A Deep Learning ... - Olympus Although it shows excellent correspondence between the two methods, the total number of objects detected with deep learning was around 3% higher. Figure 2: Nuclei detected using fluorescence (left), the corresponding brightfield image (middle), and object shape predicted by deep learning technology (right).

An Introduction to Confident Learning: Finding and Learning with Label ... cleanlab is a framework for machine learning and deep learning with label errors like how PyTorch is a framework for deep learning. ... ImageNet train set identified using confident learning. Label Errors are boxed in red. ... et al. (2013); van Rooyen et al. (2015); Patrini et al. (2017), using soft-pruning via loss-reweighting, to avoid the ...

subeeshvasu/Awesome-Learning-with-Label-Noise - GitHub 2019-ICML - Combating Label Noise in Deep Learning Using Abstention. 2019-ICML - SELFIE: Refurbishing unclean samples for robust deep learning. 2019-ICASSP - Learning Sound Event Classifiers from Web Audio with Noisy Labels. ... 2020-ICPR - Meta Soft Label Generation for Noisy Labels. 2020-IJCV ...

Pseudo Labelling - A Guide To Semi-Supervised Learning There are 3 kinds of machine learning approaches: supervised, unsupervised, and reinforcement learning. Supervised learning is where both data and labels are present. Unsupervised learning is where only data and no labels are present. Reinforcement learning is where agents learn from the actions they take to generate rewards.

A semi-supervised learning approach for soft labeled data Abstract: In some machine learning applications using soft labels is more useful and informative than crisp labels. Soft labels indicate the degree of membership of the training data to the given classes. Often only a small number of labeled data is available while unlabeled data is abundant.

Learning Soft Labels via Meta Learning The learned labels continuously adapt themselves to the model's state, thereby providing dynamic regularization. When applied to the task of supervised image-classification, our method leads to consistent gains across different datasets and architectures. For instance, dynamically learned labels improve ResNet18 by 2.1% on CIFAR100.

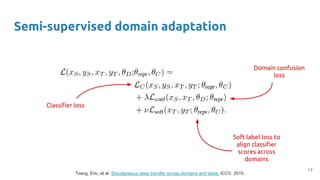

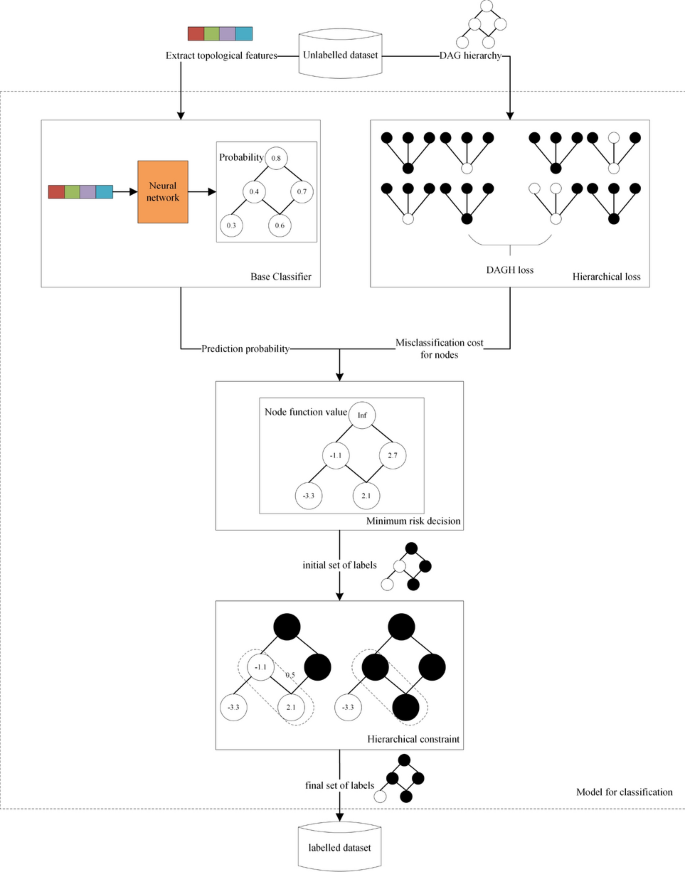

A Soft-Labeled Self-Training Approach - CNRS aims to show that a self-training approach with soft-labeling ... were taken from UCI Machine Learning Repository [11], all having 2 classes.

How to make use of "soft" labels in binary classification - Quora If you're in possession of soft labels then you're in luck, because you have more information about the ground truth than you would from binary labels alone: you have the true class and its degree. For one, you're entitled to ignore the soft information and treat the problem as a bog-standard classification.

Label Propagation for Deep Semi-Supervised Learning Pseudo-labelling methods generate labels for unlabelled data to guide learning [18]. To generate pseudo-labels, [5] leverages the sample similarity in the feature space to assign soft labels ...

MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels Soft-labels are generated from extracted features of data instances, and the mapping function is learned by a single layer perceptron (SLP) network, which is called MetaLabelNet. The base classifier is then trained using these generated soft-labels. These iterations are repeated for each batch of training data.

What is the definition of "soft label" and "hard label"? A soft label is one which has a score (probability or likelihood) attached to it. So the element is a member of the class in question with a probability/likelihood score of, e.g., 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.

Label Smoothing: An ingredient of higher model accuracy Jun 23, 2019 ... These are soft labels, instead of hard labels, that is 0 and 1. This will ultimately give you lower loss when there is an incorrect prediction, ...

How to map softMax output to labels in MXNet - Stack Overflow In deep learning the predictions are often encoded using a one-hot vector. I am using MXNet for creating a simple neural network which classifies images of animals as cats, dogs, horses etc. When I call the Predict method of MXNet it returns a softmax output.

Weakly Supervised Medical Image Segmentation With Soft Labels and Noise ... Weakly Supervised Medical Image Segmentation With Soft Labels and Noise Robust Loss Banafshe Felfeliyan, Abhilash Hareendranathan, Gregor Kuntze, Stephanie Wichuk, Nils D. Forkert, Jacob L. Jaremko, Janet L. Ronsky Recent advances in deep learning algorithms have led to significant benefits for solving many medical image analysis problems.
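To make the hard-versus-soft distinction quoted above concrete, the sketch below scores one prediction against a hard (one-hot) target and a soft target, then maps a softmax-style output back to a class name. The class names follow the cats/dogs/horses example in the Stack Overflow snippet; the probability values and the helper `cross_entropy` are illustrative assumptions, and no MXNet API is used.

```python
import math

def cross_entropy(target, predicted, eps=1e-12):
    """Cross-entropy between a target distribution and predicted probabilities."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, predicted))

classes = ["cat", "dog", "horse"]

hard_label = [0.0, 1.0, 0.0]   # "dog" with full certainty
soft_label = [0.2, 0.7, 0.1]   # mostly "dog", with some membership in other classes
predicted = [0.2, 0.7, 0.1]    # hypothetical softmax output of a model

loss_hard = cross_entropy(hard_label, predicted)
loss_soft = cross_entropy(soft_label, predicted)

# Mapping a softmax output to a label: pick the class with the largest probability.
label = classes[max(range(len(predicted)), key=predicted.__getitem__)]
```

Against a soft target the loss never reaches zero, even for a perfect match, because it bottoms out at the entropy of the target distribution. For a hard target that entropy is zero.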

Soft-Label Dataset Distillation and Text Dataset Distillation Using 'soft' labels also enables distilled datasets to consist of fewer samples than there are classes as each sample can encode information for multiple classes. For example, training a LeNet model with 10 distilled images (one per class) results in over 96% accuracy on MNIST, and almost 92% accuracy when trained on just 5 distilled images.

Label Smoothing — Make your model less (over)confident Label smoothing is often used to increase robustness and improve classification problems. Label smoothing is a form of output distribution regularization that prevents overfitting of a neural network by softening the ground-truth labels in the training data in an attempt to penalize overconfident outputs. The intuition behind label smoothing is ...

Learning with not Enough Data Part 1: Semi-Supervised Learning Xie et al. (2020) applied self-training in deep learning and achieved great results. On the ImageNet classification task, ... One is to adopt MixUp with soft labels. Given two samples, $(\mathbf{x}_i, \mathbf{x}_j)$ and their corresponding true or pseudo labels $(y_i, y_j)$, the interpolated label equation can be translated to a cross entropy ...
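The MixUp interpolation mentioned above can be sketched as follows. Because cross-entropy is linear in the target, the loss on the interpolated label equals the same weighted mix of the two individual losses. The function names, the toy vectors, and the fixed mixing weight `lam` are assumptions for illustration (in practice the weight is usually sampled from a Beta distribution).

```python
import math

def mixup(x_i, y_i, x_j, y_j, lam):
    """Interpolate two inputs and their label vectors with weight lam in [0, 1]."""
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_i, x_j)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_i, y_j)]
    return x, y

def cross_entropy(target, predicted):
    """Cross-entropy between a target distribution and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted))

# Two toy samples with one-hot labels (these could also be pseudo labels).
x_i, y_i = [0.0, 1.0], [1.0, 0.0]
x_j, y_j = [1.0, 0.0], [0.0, 1.0]
lam = 0.25  # fixed here for clarity

x_mix, y_mix = mixup(x_i, y_i, x_j, y_j, lam)  # y_mix is a soft label

predicted = [0.4, 0.6]  # hypothetical model output for x_mix
loss_mixed = cross_entropy(y_mix, predicted)
loss_split = (lam * cross_entropy(y_i, predicted)
              + (1.0 - lam) * cross_entropy(y_j, predicted))
```

Here `loss_mixed` and `loss_split` agree up to floating-point error, which is why a MixUp loss can be computed either from the soft label directly or from the two original labels.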

(PDF) Deep learning with noisy labels: Exploring techniques and ... Supervised training of deep learning models requires large labeled datasets. There is a growing interest in obtaining such datasets for medical image analysis applications. However, the impact of...

Validation of Soft Labels in Developing Deep Learning Algorithms for Detecting Lesions of Myopic Maculopathy From Optical Coherence Tomographic Images The predicted possibilities from the models trained by soft labels were close to the results made by myopia specialists.

Rethinking Soft Labels for Knowledge Distillation: A Bias-Variance ... arXiv:2102.00650 (cs), Computer Science > Machine Learning. Submitted on 1 Feb 2021.

A radical new technique lets AI learn with practically no data With carefully engineered soft labels, even two examples could theoretically encode any number of categories. "With two points, you can separate a thousand classes or 10,000 classes or a million ...

What is Label Smoothing? A technique to make your model less… | by ... Label smoothing is used when the loss function is cross entropy, and the model applies the softmax function to the penultimate layer's logit vectors $z$ to compute its output probabilities $p$. In this setting, the gradient of the cross entropy loss function with respect to the logits is simply $\nabla \mathrm{CE} = p - y = \mathrm{softmax}(z) - y$
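The gradient identity quoted above can be checked numerically. The sketch below compares the analytic expression softmax(z) - y with a central finite-difference estimate, using a smoothed target. The logits, the target, and all function names are illustrative assumptions; the identity relies on the target vector summing to 1.

```python
import math

def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy_from_logits(y, z):
    """Cross-entropy between target y and softmax(z)."""
    p = softmax(z)
    return -sum(y_k * math.log(p_k) for y_k, p_k in zip(y, p))

def numeric_gradient(y, z, h=1e-6):
    """Central finite-difference estimate of d(CE)/dz."""
    grad = []
    for k in range(len(z)):
        up, down = list(z), list(z)
        up[k] += h
        down[k] -= h
        diff = cross_entropy_from_logits(y, up) - cross_entropy_from_logits(y, down)
        grad.append(diff / (2.0 * h))
    return grad

z = [2.0, 0.5, -1.0]    # toy logits
y = [0.9, 0.05, 0.05]   # smoothed (soft) target that sums to 1

analytic = [p_k - y_k for p_k, y_k in zip(softmax(z), y)]
numeric = numeric_gradient(y, z)
max_gap = max(abs(a - n) for a, n in zip(analytic, numeric))
```

The gap between the two estimates should be tiny, limited only by finite-difference error.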

Label smoothing with Keras, TensorFlow, and Deep Learning This type of label assignment is called soft label assignment. Unlike hard label assignments where class labels are binary (i.e., positive for one class and a negative example for all other classes), soft label assignment allows the positive class to have the largest probability, while all other classes have a very small probability.
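A minimal sketch of such a soft label assignment, assuming the common uniform-smoothing formulation in which the one-hot target is scaled by (1 - ε) and ε / K is added to every one of the K classes. The function name and the value ε = 0.1 are illustrative choices, not taken from the linked tutorial.

```python
def smooth_labels(class_index, num_classes, epsilon=0.1):
    """Build a smoothed label vector: (1 - epsilon) * one_hot + epsilon / num_classes."""
    uniform = epsilon / num_classes
    return [
        (1.0 - epsilon) + uniform if k == class_index else uniform
        for k in range(num_classes)
    ]

hard = [0.0, 0.0, 1.0, 0.0]                                       # hard one-hot label
soft = smooth_labels(class_index=2, num_classes=4, epsilon=0.1)   # about [0.025, 0.025, 0.925, 0.025]

positive_class = max(range(len(soft)), key=soft.__getitem__)
```

The positive class keeps the largest probability while every other class receives a small, equal share, and the vector still sums to 1.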

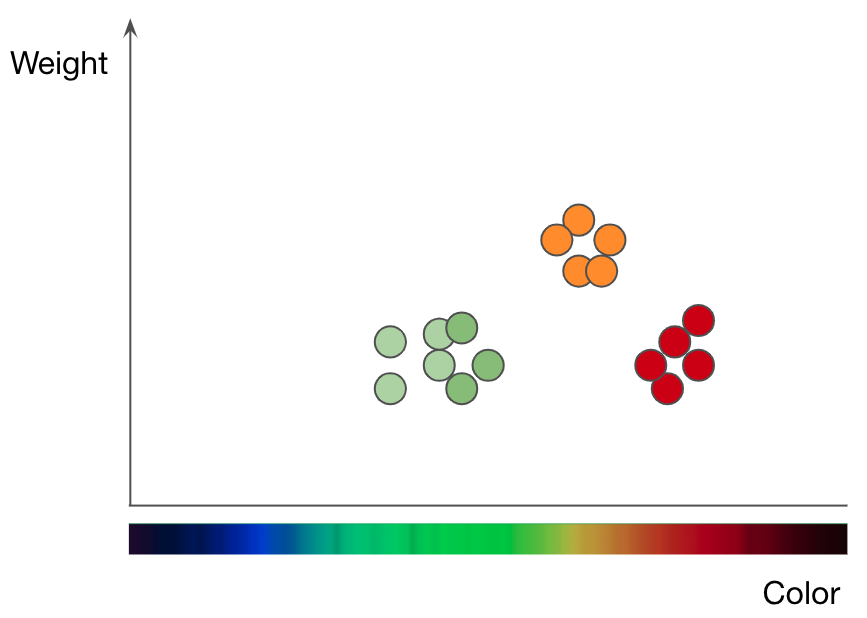

How to solve Classification Problems in Deep Learning with ... - Medium Types of Label Encoding. In general, we can use different encodings for true (actual) labels (y values): a floating number (e.g. in binary classification: 1.0 or 0.0). cat → 0.0; dog → 1.0 ...

Weakly Supervised Medical Image Segmentation With Soft Labels and Noise ... Soft labels can provide additional information to the learning algorithm, which was shown to reduce the number of instances required to train a model [31]. Label smoothing techniques can be considered as utilization of probabilistic labels (soft labels).

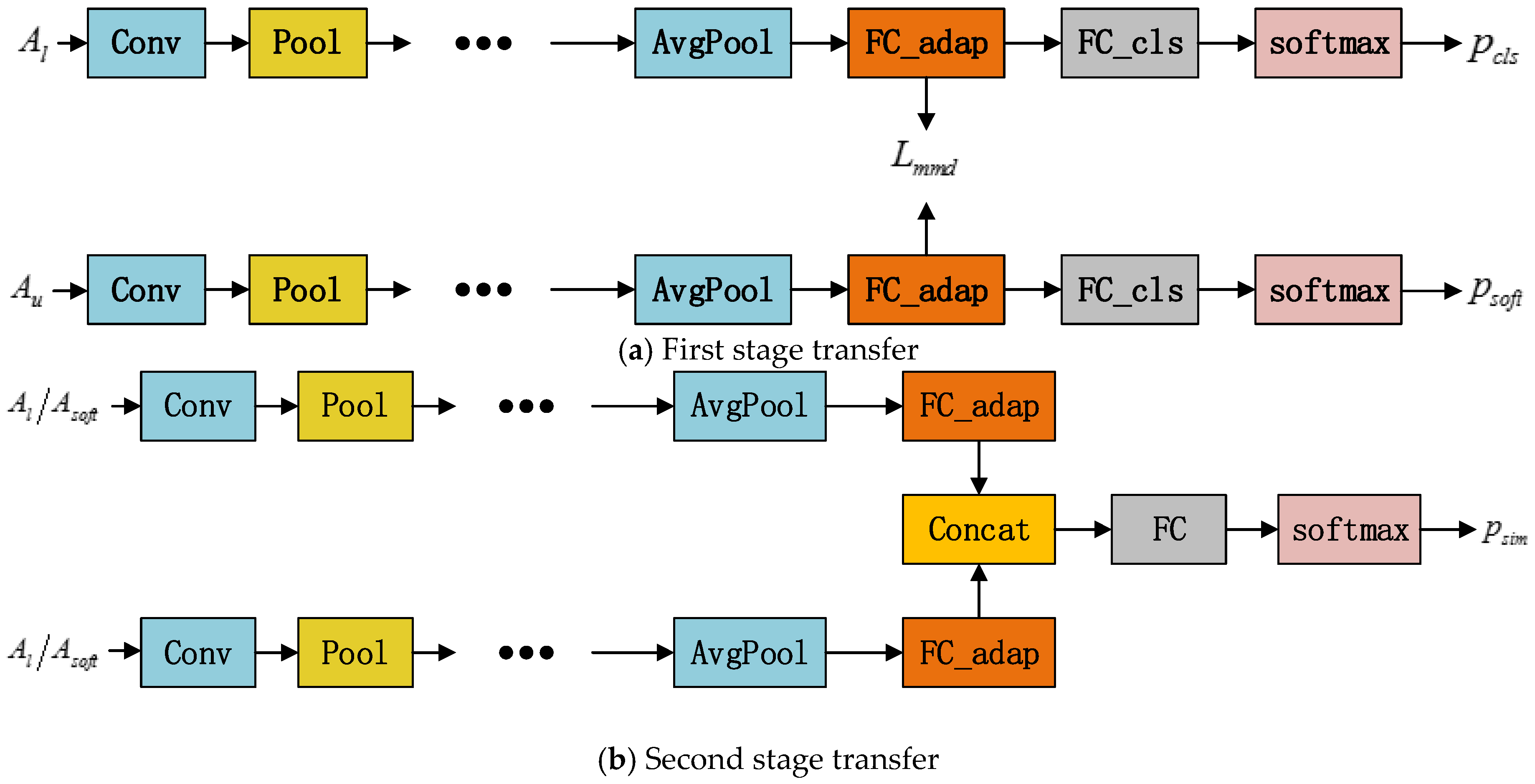

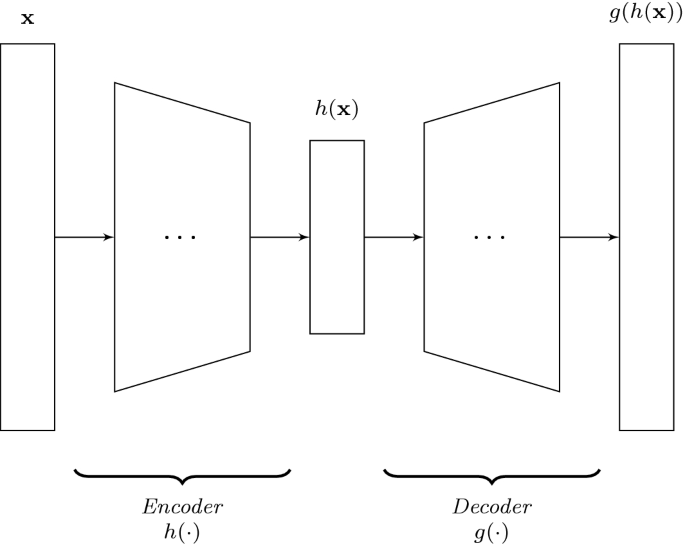

Unsupervised deep hashing through learning soft pseudo label for remote ... We design a deep auto-encoder network SPLNet, which can automatically learn soft pseudo-labels and generate a local semantic similarity matrix. The soft pseudo-labels represent the global similarity between inter-cluster RS images, and the local semantic similarity matrix describes the local proximity between intra-cluster RS images.

MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels Mar 19, 2021 ... Soft-labels are generated from extracted features of data instances, and the mapping function is learned by a single layer perceptron (SLP) ...

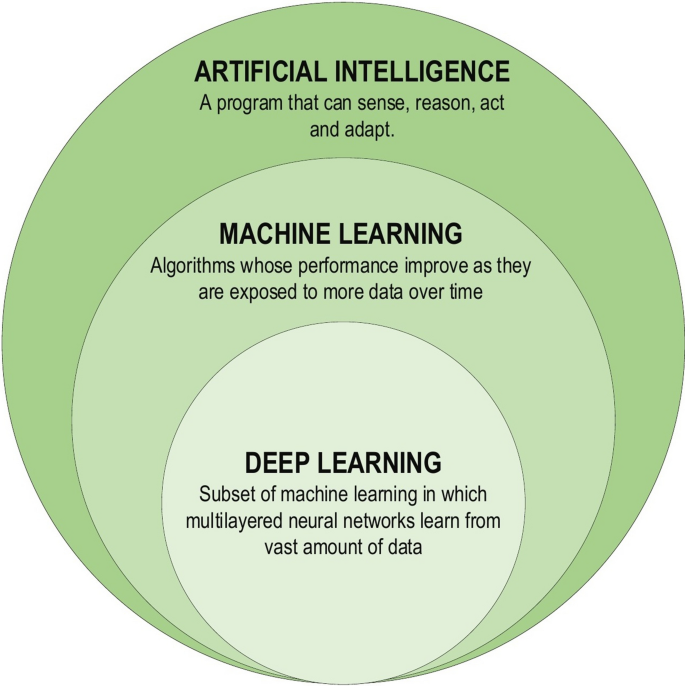

What is Deep Learning? | IBM Deep learning is a subset of machine learning, which is essentially a neural network with three or more layers. These neural networks attempt to simulate the behavior of the human brain—albeit far from matching its ability—allowing it to "learn" from large amounts of data. While a neural network with a single layer can still make ...

Multi-Class Neural Networks: Softmax | Machine Learning - Google Developers Candidate sampling means that Softmax calculates a probability for all the positive labels but only for a random sample of negative labels. For example, if we are interested in determining whether...

Loss and Loss Functions for Training Deep Learning Neural Networks Almost universally, deep learning neural networks are trained under the framework of maximum likelihood using cross-entropy as the loss function. Most modern neural networks are trained using maximum likelihood. This means that the cost function is […] described as the cross-entropy between the training data and the model distribution.

Learning classification models with soft-label information - PMC - NCBI Nov 20, 2013 ... Briefly, standard classification algorithms (eg, logistic regression, support vector machines (SVMs)) use only class labels, and do not accept ...

